Apps

Auto Added by WPeMatico


Tiger Global is in talks to lead a $30 million round in Indian edtech startup Classplus, according to sources familiar with the matter.

The new round, which includes both primary investment and secondary transactions, values the five-year-old Indian startup at over $250 million, two sources told TechCrunch.

The new round follows a recent ~$30 million investment led by GSV, one of the sources said. The new round hasn’t closed, so terms may change.

Classplus — which has built a Shopify-like platform for coaching centers to accept fees digitally from students and deliver classes and study material online — also raised $10.3 million in September last year from Falcon Edge’s AWI, cricketer Sourav Ganguly and existing investors RTP Global and Blume Ventures. That round had valued Classplus at about $73 million, according to research firm Tracxn.

Classplus didn’t respond to a request for comment. Sources requested anonymity, as the matter is private.

As tens of millions of students, along with their parents, embrace digital learning apps, Classplus is betting that the hundreds of thousands of teachers and coaching centers that have built a reputation in their neighborhoods are here to stay.

The startup is serving these hyperlocal tutoring centers that are present in nearly every nook and cranny in India. “Anyone who was born in a middle-class family here has likely attended these tuition classes,” Mukul Rustagi, co-founder and chief executive of Classplus, told TechCrunch last year.

“These are typically small and medium setups that are run by teachers themselves. These teachers and coaching centers are very popular in their locality. They rarely do any marketing and students learn about them through word-of-mouth buzz,” he said then.

Rustagi had described Classplus as “Shopify for coaching centers.” Like Shopify, Classplus is not a marketplace that helps customers discover these teachers or coaching centers; instead, it offers a tech platform these teachers can leverage to engage with their customers.

This year, Tiger Global has backed, or is in talks to back, about two dozen startups in India.

Powered by WPeMatico

Apple incorporated the announcement of this year’s Apple Design Award winners into its virtual Worldwide Developers Conference (WWDC) online event, instead of waiting until the event had wrapped as it did last year. Ahead of WWDC, Apple previewed the finalists, whose apps and games showcased a combination of technical achievement, design and ingenuity. This evening, Apple announced the winners across six new award categories.

In each category, Apple selected one app and one game as the winner.

In the Inclusivity category, winners supported people from a diversity of backgrounds, abilities and languages.

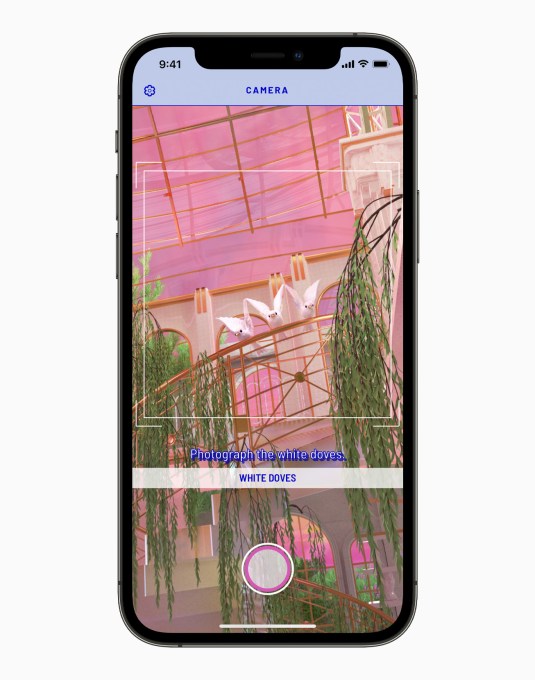

This year, winners included U.S.-based Aconite’s highly accessible game, HoloVista, where users can adjust various options for motion control, text sizes, text contrast, sound and visual effect intensity. In the game, users explore using the iPhone’s camera to find hidden objects, solve puzzles and more.

Image Credits: Aconite

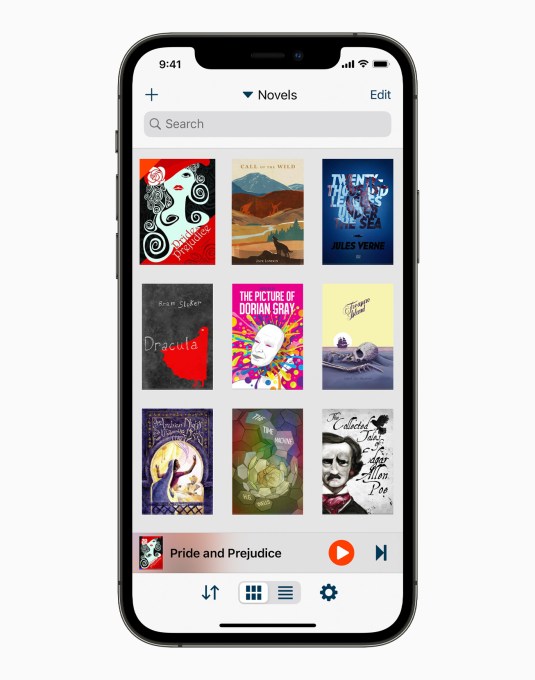

Another winner, Voice Dream Reader, is a text-to-speech app that supports more than two dozen languages and offers adaptive features and a high level of customizable settings.

Image Credits: Voice Dream LLC

In the Delight and Fun category, winners offer memorable and engaging experiences enhanced by Apple technologies. Belgium’s Pok Pok Playroom, a children’s entertainment app that spun out of Snowman (the studio behind the Alto’s Adventure series), won for its thoughtful design and use of subtle haptics, sound effects and interactions.

Image Credits: Pok Pok

The other winner was the U.K.’s Little Orpheus, a platformer that combines storytelling, surprises and fun, and offers a console-like experience in a casual game.

Image Credits: The Chinese Room

The Interaction category winners showcase apps that offer intuitive interfaces and effortless controls, Apple says.

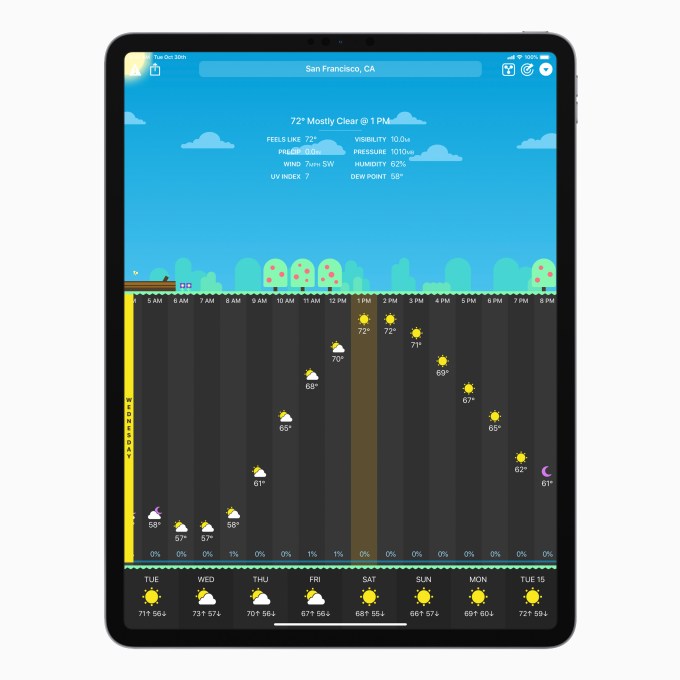

The U.S.-based snarky weather app CARROT Weather won for its humorous forecasts, unique visuals and entertaining experience, which is also available as Apple Watch faces and widgets.

Image Credits: Brian Mueller, Grailr LLC

Canada’s Bird Alone game combines gestures, haptics, parallax and dynamic sound effects in clever ways to bring its world to life.

Image Credits: George Batchelor

The Social Impact category awards went to Denmark’s Be My Eyes, which enables people who are blind or have low vision to identify objects by using their camera to connect with volunteers from around the world. Today, it supports more than 300,000 users who are assisted by over 4.5 million volunteers.

Image Credits: S/I Be My Eyes

The U.K.’s ustwo games won in this category for Alba, a game that teaches about respecting the environment as players save wildlife, repair a bridge, clean up trash and more. The game also plants a tree for every download.

Image Credits: ustwo games

The Visuals and Graphics winners feature “stunning imagery, skillfully drawn interfaces, and high-quality animations,” Apple says.

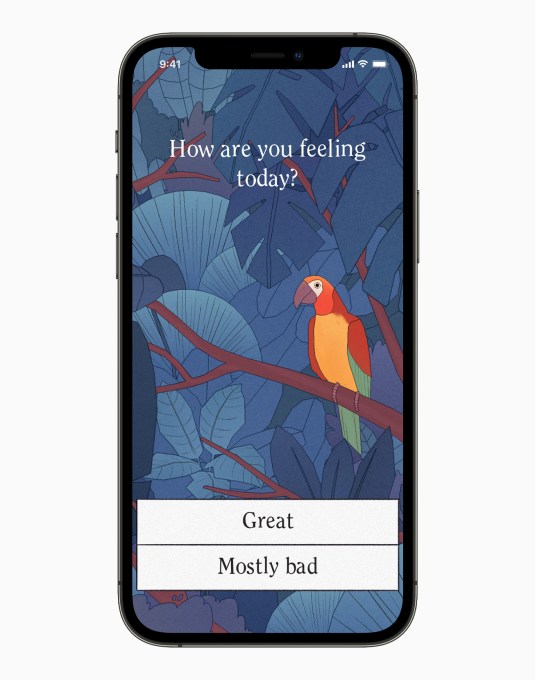

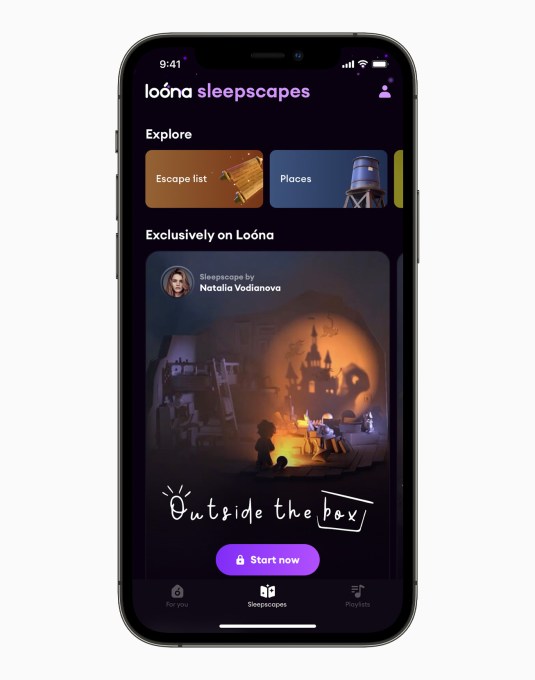

Belarus-based Loóna offers sleepscape sessions, which combine relaxing activities and atmospheric sounds with storytelling to help people wind down at night. The app was recently awarded Google’s “best app” of 2020.

Image Credits: Loóna Inc

China’s Genshin Impact won for pushing the visual frontier on gaming, as motion blur, shadow quality and frame rate can be reconfigured on the fly. The game had previously made Apple’s Best of 2020 list and was Google’s best game of 2020.

Image Credits: miHoYo Limited

Innovation winners included India’s NaadSadhana, an all-in-one, studio-quality music app that helps artists perform and publish. The app uses AI and Core ML to listen and provide feedback on the accuracy of notes, and generates a backing track to match.

Image Credits: Sandeep Ranade

Riot Games’ League of Legends: Wild Rift (U.S.) won for taking a complex PC classic and delivering a full mobile experience that includes touchscreen controls, an auto-targeting system for newcomers and a mobile-exclusive camera setting.

Image Credits: Riot Games

The winners this year will receive a prize package that includes hardware and the award itself.

A video featuring the winners is available on the Apple Developer website.

“This year’s Apple Design Award winners have redefined what we’ve come to expect from a great app experience, and we congratulate them on a well-deserved win,” said Susan Prescott, Apple’s vice president of Worldwide Developer Relations, in a statement. “The work of these developers embodies the essential role apps and games play in our everyday lives, and serve as perfect examples of our six new award categories.”


Apple announced a batch of accessibility features at WWDC 2021 that cover a wide variety of needs, among them a few for people who can’t touch or speak to their devices in the ordinary way. With Assistive Touch, Sound Control and other improvements, these folks have new options for interacting with an iPhone or Apple Watch.

We covered Assistive Touch when it was first announced, but recently got a few more details. This feature lets anyone with an Apple Watch operate it with one hand by means of a variety of gestures. It came about when Apple heard from the community of people with limb differences — whether they’re missing an arm, or unable to use it reliably, or anything else — that as much as they liked the Apple Watch, they were tired of answering calls with their noses.

The research team cooked up a way to reliably detect the gestures of pinching one finger to the thumb, or clenching the hand into a fist, based on how doing them causes the watch to move — it’s not detecting nervous system signals or anything. These gestures, as well as double versions of them, can be set to a variety of quick actions. Among them is opening the “motion cursor,” a little dot that mimics the movements of the user’s wrist.

Considering how many people don’t have the use of a hand, this could be a really helpful way to get basic messaging, calling and health-tracking tasks done without needing to resort to voice control.

Speaking of voice, that’s also something not everyone has at their disposal. Many people who can’t speak fluently can still make a range of basic sounds, which carry meaning for those who have learned to understand them, though not for Siri. A new accessibility option called “Sound Control” lets these sounds be used as voice commands. You access it through Switch Control, rather than the audio or voice settings, by adding an audio switch.

The setup menu lets the user choose from a variety of possible sounds: click, cluck, e, eh, k, la, muh, oo, pop, sh and more. Picking one brings up a quick training process to let the user make sure the system understands the sound correctly, and then it can be set to any of a wide selection of actions, from launching apps to asking commonly spoken questions or invoking other tools.

For those who prefer to interact with their Apple devices through a switch system, the company has a big surprise: Game controllers, once only able to be used for gaming, now work for general purposes as well. Specifically noted is the amazing Xbox Adaptive Controller, a hub and group of buttons, switches and other accessories that improves the accessibility of console games. This powerful tool is used by many, and no doubt they will appreciate not having to switch control methods entirely when they’re done with Fortnite and want to listen to a podcast.

One more interesting capability in iOS that sits at the edge of accessibility is Walking Steadiness. This feature, available to anyone with an iPhone, tracks (as you might guess) the steadiness of the user’s walk. This metric, tracked throughout a day or week, can potentially give real insight into how and when a person’s locomotion is better and worse. It’s based on a bunch of data collected in the Apple Heart and Movement study, including actual falls and the unsteady movement that led to them.

If the user is someone who recently was fitted for a prosthesis, or had foot surgery, or suffers from vertigo, knowing when and why they are at risk of falling can be very important. They may not realize it, but perhaps their movements are less steady toward the end of the day, or after climbing a flight of steps, or after waiting in line for a long time. It could also show steady improvements as they get used to an artificial limb or chronic pain declines.

Exactly how this data may be used by an actual physical therapist or doctor is an open question, but importantly it’s something that can easily be tracked and understood by the users themselves.

Among Apple’s other assistive features are new languages for voice control, improved headphone acoustic accommodation, support for bidirectional hearing aids, and of course the addition of cochlear implants and oxygen tubes for memoji. As an Apple representative put it, they don’t want to embrace differences just in features, but on the personalization and fun side as well.


This spring, Facebook confirmed it was testing Venmo-like QR codes for person-to-person payments inside its app in the U.S. Today, the company announced those codes are now launching publicly to all U.S. users, allowing anyone to send or request money through Facebook Pay — even if they’re not Facebook friends.

The QR codes work similarly to those found in other payment apps, like Venmo.

The feature can be found under the “Facebook Pay” section in Messenger’s settings, accessed by tapping on your profile icon at the top left of the screen. Here, you’ll be presented with your personalized QR code which looks much like a regular QR code except that it features your profile icon in the middle.

Underneath, you’ll be shown your personal Facebook Pay URL, which is in the format “https://m.me/pay/UserName.” This can also be copied and sent to other users when you’re requesting a payment.

Facebook notes that the codes will work between any U.S. Messenger users, and won’t require a separate payment app or any sort of contact entry or upload process to get started.

Users who want to be able to send and receive money in Messenger have to be at least 18 years old, and will have to have a Visa or Mastercard debit card, a PayPal account or one of the supported prepaid cards or government-issued cards, in order to use the payments feature. They’ll also need to set their preferred currency to U.S. dollars in the app.

After setup is complete, you can choose which payment method you want as your default and optionally protect payments behind a PIN code of your choosing.

The QR code is also available from the Facebook Pay section of the main Facebook app, in a carousel at the top of the screen.

Facebook Pay first launched in November 2019, as a way to establish a payment system that extends across the company’s apps for not just person-to-person payments, but also other features, like donations, Stars and e-commerce, among other things. Though the QR codes take cues from Venmo and others, the service as it stands today is not necessarily a rival to payment apps because Facebook partners with PayPal as one of the supported payment methods.

For now, the payments experience is separate from Facebook’s cryptocurrency wallet, Novi, though that could change in the future.

Image Credits: Facebook

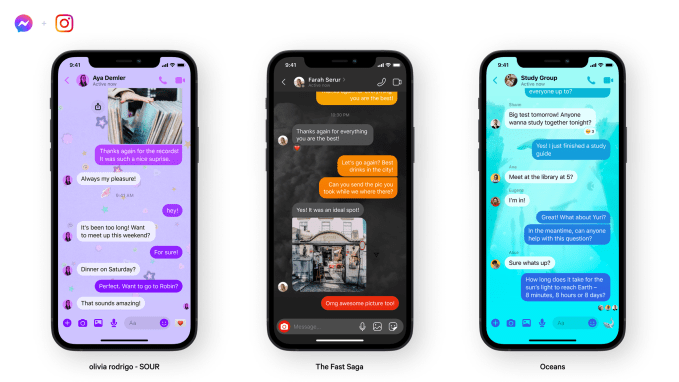

The feature was introduced alongside a few other Messenger updates, including a new Quick Reply bar that makes it easier to respond to a photo or video without having to return to the main chat thread. Facebook also added new chat themes including one for Olivia Rodrigo fans, another for World Oceans Day, and one that promotes the new F9 movie.


If you’ve ever bought a subscription inside an iOS app and later decided you wanted to cancel, upgrade or downgrade, or ask for a refund, you may have had trouble figuring out how to go about making that request or change. Some people today still believe that they can stop their subscription charges simply by deleting an app from their iPhone. Others may dig around unsuccessfully inside their iPhone’s Settings or on the App Store to try to find out how to ask for a refund. With the updates Apple announced in StoreKit 2 during its Worldwide Developers Conference this week, things may start to get a little easier for app customers.

StoreKit is Apple’s developer framework for managing in-app purchases — an area that’s become more complex in recent years, as apps have transitioned from offering one-time purchases to ongoing subscriptions with different tiers, lengths and feature sets.

Image Credits: Apple

Currently, users who want to manage or cancel subscriptions can do so from the App Store or their iPhone Settings. But some don’t realize the path to this section from Settings starts by tapping on your Apple ID (your name and profile photo at the top of the screen). They may also get frustrated if they’re not familiar with how to navigate their Settings or the App Store.

Meanwhile, there are a variety of ways users can request refunds on their in-app subscriptions. They can dig in their inbox for their receipt from Apple, then click the “Report a Problem” link it includes to request a refund when something went wrong. This could be useful in scenarios where you’ve bought a subscription by mistake (or your kid has!), or where the promised features didn’t work as intended.

Apple also provides a dedicated website where users can directly request refunds for apps or content. (When you Google for something like “request a refund apple” or similar queries, a page that explains the process typically comes up at the top of the search results.)

Still, many users aren’t technically savvy. For them, the easiest way to manage subscriptions or ask for refunds would be to do so from within the app itself. For this reason, many conscientious app developers tend to include links to point customers to Apple’s pages for subscription management or refunds inside their apps.

But StoreKit 2 is introducing new tools that will allow developers to implement these sorts of features more easily.

One new tool is a Manage subscriptions API, which lets an app developer display the manage subscriptions page for their customer directly inside their app, without redirecting the customer to the App Store. Optionally, developers can choose to display a “Save Offer” screen that presents the customer with a discount of some kind to keep them from canceling, or an exit survey that asks the customer why they decided to end their subscription.

When implemented, the customer will be able to view a screen inside the app that looks just like the one they’d visit in the App Store to cancel or change a subscription. After canceling, they’ll be shown a confirmation screen with the cancellation details and the service expiration date.

If the customer wants to request a refund, a new Refund request API will allow them to begin the request directly in the app itself, again without being redirected to the App Store or another website. On the screen that appears, the customer can select the item they want refunded and choose the reason for the request. Apple handles the refund process and will send either an approval or a refund-declined notification back to the developer’s server.
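To make the server side of this concrete, here is a minimal Python sketch of how a developer’s backend might react to those two outcomes. It assumes the notification has already been verified and decoded. Apple’s App Store Server Notifications do use the type names `REFUND` and `REFUND_DECLINED`, but the flat `type`/`transaction_id` dict, the function name and the in-memory `entitlements` store below are hypothetical simplifications, not Apple’s payload format.

```python
def handle_refund_notification(notification, entitlements, support_log):
    """Route a decoded refund notification (hypothetical, simplified shape).

    notification: dict with hypothetical keys "type" and "transaction_id".
    entitlements: dict mapping transaction_id -> bool (True = access granted).
    support_log: list collecting messages for the support team.
    Returns True if the notification was handled, False if it was ignored.
    """
    kind = notification["type"]
    txn = notification["transaction_id"]
    if kind == "REFUND":
        # Apple approved the refund: revoke the content tied to this purchase.
        entitlements[txn] = False
        support_log.append(f"{txn}: refund approved, access revoked")
    elif kind == "REFUND_DECLINED":
        # Apple declined: access stays, but support may want to follow up, since
        # the customer may assume the developer made the decision.
        support_log.append(f"{txn}: refund declined by Apple")
    else:
        # Other notification types are outside the scope of this sketch.
        return False
    return True
```

In a real server the decision of what to revoke would come from the verified transaction data; the point here is only that the developer reacts to Apple’s decision rather than making it.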

However, some developers argue that the changes don’t go far enough. They want to be in charge of managing customer subscriptions and handling refunds themselves, through programmatic means. Plus, it can take up to 48 hours for the customer to receive an update from Apple on their refund request, which can be confusing.

“They’ve made the process a bit smoother, but developers still can’t initiate refunds or cancellations themselves,” notes RevenueCat CEO Jacob Eiting, whose company provides tools to app developers to manage their in-app purchases. “It’s a step in the right direction, but could actually lead to more confusion between developers and consumers about who is responsible for issuing refunds.”

In other words, because the forms are now going to be more accessible from inside the app, the customer may believe the developer is handling the refund process when, really, Apple continues to do so.

Some developers pointed out that there are other scenarios this process doesn’t address. For example, if the customer has already uninstalled the app or no longer has the device in question, they’ll still need to be directed to other means of asking for refunds, just as before.

For consumers, though, subscription management tools like this mean more developers may begin to put buttons to manage subscriptions and ask for refunds directly inside their app, which is a better experience. In time, as customers learn they can more easily use the app and manage subscriptions, app developers may see better customer retention, higher engagement, and better App Store reviews, notes Apple.

The StoreKit 2 changes weren’t limited to APIs for managing subscriptions and refunds.

Developers will also gain access to a new Invoice Lookup API that allows them to look up the in-app purchases for the customer, validate their invoice and identify any problems with the purchase — for example, if there were any refunds already provided by the App Store.

A new Refunded Purchases API will allow developers to look up all the refunds for the customer.
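A rough sketch of what a developer might do with the results of these two lookups, written in Python over a hypothetical, simplified `Purchase` record (Apple’s real responses are signed transaction data with many more fields): check one invoice for problems such as an earlier refund, and list every refunded purchase for the customer. The function names are illustrative, not Apple’s.

```python
from dataclasses import dataclass


@dataclass
class Purchase:
    # Hypothetical, simplified purchase record for illustration only.
    order_id: str
    product_id: str
    refunded: bool = False


def lookup_invoice(purchases, order_id):
    """Return the purchases on one invoice (order), flagging any problems."""
    items = [p for p in purchases if p.order_id == order_id]
    if not items:
        return {"valid": False, "items": [], "problems": ["order not found"]}
    # An item the App Store has already refunded is one kind of problem to surface.
    problems = [f"{p.product_id} already refunded" for p in items if p.refunded]
    return {"valid": True, "items": items, "problems": problems}


def refunded_purchases(purchases):
    """Return every refunded purchase for the customer."""
    return [p for p in purchases if p.refunded]
```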

A new Renewal Extension API will allow developers to extend the renewal date for paid, active subscriptions in the case of an outage, for example when a streaming service goes down and customers contact support. This API lets developers extend a subscription up to twice per calendar year, each time by up to 90 days.
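The limits described above can be expressed as a simple eligibility check. This is a hedged sketch that encodes the rules exactly as stated here (paid, active subscriptions; at most two extensions per calendar year; at most 90 days each). The function and parameter names are hypothetical, and Apple’s own documentation should be treated as the authority on how the window is counted.

```python
from datetime import date

MAX_EXTENSIONS_PER_YEAR = 2  # as described: up to twice per calendar year
MAX_DAYS_PER_EXTENSION = 90  # as described: each extension up to 90 days


def can_extend(extension_dates, request_date: date, days, is_active_paid=True):
    """Check a proposed renewal-date extension against the stated limits.

    extension_dates: dates of extensions already granted to this subscription.
    """
    if not is_active_paid:
        return False  # only paid, active subscriptions qualify
    if not 1 <= days <= MAX_DAYS_PER_EXTENSION:
        return False
    # Count only extensions granted in the same calendar year as the request.
    this_year = [d for d in extension_dates if d.year == request_date.year]
    return len(this_year) < MAX_EXTENSIONS_PER_YEAR
```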

And finally, a new Consumption API will allow developers to share information about a customer’s in-app purchase with the App Store. In most cases, customers begin consuming content soon after purchase — information that’s helpful in the refund decision process. The API will allow the App Store to see if the user consumed the in-app purchase partially, fully, or not at all.
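As an illustration, an app that meters usage could map it onto the three outcomes mentioned above before reporting to the App Store. The string labels and the `units_used`/`units_total` parameters are hypothetical; the actual API defines its own field values.

```python
def consumption_status(units_used, units_total):
    """Classify how much of an in-app purchase the customer has used.

    units_used / units_total: whatever the app meters (hypothetical), e.g.
    episodes watched out of episodes unlocked, or credits spent out of bought.
    """
    if units_total <= 0:
        raise ValueError("units_total must be positive")
    if units_used <= 0:
        return "not_consumed"
    if units_used >= units_total:
        return "fully_consumed"
    return "partially_consumed"
```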

Another change will help customers when they reinstall apps or download them on new devices. Before, users would have to manually “restore purchases” to sync the status of the completed transactions back to that newly downloaded or reinstalled app. Now, that information will be automatically fetched by StoreKit 2 so the apps are immediately up-to-date with whatever it is the user paid for.
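Conceptually, automatic syncing means an app can recompute what the user owns from their transaction history instead of asking them to tap “restore purchases.” The Python sketch below shows that derivation over a hypothetical, simplified `Transaction` record; in StoreKit 2’s Swift API, the corresponding list is exposed as `Transaction.currentEntitlements`.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Transaction:
    # Hypothetical, simplified transaction record for illustration only.
    product_id: str
    purchased_at: datetime
    expires_at: Optional[datetime] = None  # None for non-expiring purchases
    revoked: bool = False  # e.g. the purchase was refunded


def current_entitlements(transactions, now):
    """Derive the set of products the user currently owns from their history."""
    owned = set()
    for t in transactions:
        if t.revoked:
            continue  # refunded or otherwise revoked purchases grant nothing
        if t.expires_at is not None and t.expires_at <= now:
            continue  # lapsed subscription period
        owned.add(t.product_id)
    return owned
```

Because the result depends only on the history, a reinstalled app or a new device reaches the same answer without any user action.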

While, overall, the changes make for a significant update to the StoreKit framework, Apple’s hesitancy to allow developers more control over their own subscription-based customers speaks, in part, to how much it wants to control in-app purchases. This is perhaps because it got burned in the past when it tried allowing developers to manage their own refunds.

As The Verge noted last month while the Epic Games-Apple antitrust trial was underway, Apple had once provided Hulu with access to a subscription API, then discovered Hulu had been offering a way to automatically cancel subscriptions made through the App Store when customers wanted to upgrade to higher-priced subscription plans. Apple realized it needed to take action to protect against this misuse of the API, and Hulu later lost access. Apple has not since made that API more broadly available.

On the flip side, having Apple, not the developers, in charge of subscription management and refunds means Apple takes on the responsibility of preventing fraud, including fraud perpetrated by customers and developers alike. Customers may also prefer having a single place to manage their subscription billing: Apple. They may not want to deal with each developer individually, which would make their experience inconsistent.

These changes matter because subscription revenue accounts for a sizable share of Apple’s lucrative App Store business. Ahead of WWDC 21, Apple reported the sale of digital goods and services on the App Store grew to $86 billion in 2020, up 40% over the year prior. Earlier this year, Apple said it has paid out more than $200 billion to developers since the App Store launched in 2008.


BukuWarung, a fintech focused on Indonesia’s micro, small and medium enterprises (MSMEs), announced today it has raised a $60 million Series A. The oversubscribed round was co-led by Valar Ventures, in the firm’s first investment in Indonesia, and Goodwater Capital. The Jakarta-based startup claims this is the largest Series A round ever raised by a startup focused on services for MSMEs. BukuWarung did not disclose its valuation, but sources tell TechCrunch it is estimated to be between $225 million and $250 million.

Other participants included returning backers and angel investors like Aldi Haryopratomo, former chief executive officer of payment gateway GoPay, Klarna co-founder Victor Jacobsson and partners from SoftBank and Trihill Capital.

Founded in 2019, BukuWarung’s target market is the more than 60 million MSMEs in Indonesia, according to data from the Ministry of Cooperatives and SMEs. These businesses contribute about 61% of the country’s gross domestic product and employ 97% of its workforce.

BukuWarung’s services, including digital payments, inventory management, bulk transactions and a Shopify-like e-commerce platform called Tokoko, are designed to digitize merchants that previously did most of their business offline (many of its clients started taking online orders during the COVID-19 pandemic). It is building what it describes as an “operating system” for MSMEs and currently claims more than 6.5 million registered merchants in 750 Indonesian cities, with most in Tier 2 and Tier 3 areas. It says it has processed about $1.4 billion in annualized payments so far, and is on track to process over $10 billion in annualized payments by 2022.

BukuWarung’s new round brings its total funding to $80 million. The company says its growth in users and payment volumes has been capital efficient, and that more than 90% of its funds raised have not been spent. It plans to add more MSME-focused financial services, including lending, savings and insurance, to its platform.

BukuWarung’s new funding announcement comes four months after its previous one, and less than a month after competitor BukuKas disclosed it had raised a $50 million Series B. Both started out as digital bookkeeping apps for MSMEs before expanding into financial services and e-commerce tools.

When asked how BukuWarung plans to continue differentiating from BukuKas, co-founder and CEO Abhinay Peddisetty told TechCrunch, “We don’t see this space as a winner takes all, our focus is on building the best products for MSMEs as proven by our execution on our payments and accounting, shown by massive growth in payments TPV as we’re 10x bigger than the nearest player in this space.”

He added, “We have already run successful lending experiments with partners in fintech and banks and are on track to monetize our merchants backed by our deep payments, accounting and other data that we collect.”

BukuWarung’s new funding will be used to double its current workforce of 150, located in Indonesia, Singapore and India, to 300 and develop BukuWarung’s accounting, digital payments and commerce products, including a payments infrastructure that will include QR payments and other services.


With the upcoming release of iOS 15 for Apple mobile devices, Apple’s built-in search feature known as Spotlight will become a lot more functional. In what may be one of its bigger updates since it introduced Siri Suggestions, the new version of Spotlight is becoming an alternative to Google for several key queries, including web images and information about actors, musicians, TV shows and movies. It will also now be able to search across your photo library, deliver richer results for contacts, and connect you more directly with apps and the information they contain. It even allows you to install apps from the App Store without leaving Spotlight itself.

Spotlight is also more accessible than ever before.

Years ago, in iOS 7, Spotlight moved from its location to the left of the Home screen to a swipe-down gesture available from the middle of any screen, which helped grow user adoption. Now, it’s available with the same swipe-down gesture on the iPhone’s Lock Screen, too.

Apple showed off a few of Spotlight’s improvements during its keynote address at its Worldwide Developers Conference, including the search feature’s new cards for looking up information on actors, movies and shows, as well as musicians. This change alone could redirect a good portion of web searches away from Google or dedicated apps like IMDb.

For years, Google has offered quick access to common searches through its Knowledge Graph, a knowledge base that lets it gather information from across sources and then use it to add informational panels above and beside its standard search results. Panels on actors, musicians, shows and movies are available as part of that effort.

But now, iPhone users can just pull up this info on their home screen.

The new cards include more than the typical Wikipedia bio and background information you may expect. They also showcase links to where you can listen to or watch content from the artist, actor, movie or show in question. They include news articles, social media links and official websites, and can even direct you to where the searched person or topic may be found inside your own apps (e.g. a search for “Billie Eilish” may direct you to her tour tickets inside SeatGeek, or a podcast where she’s a guest).

Image Credits: Apple

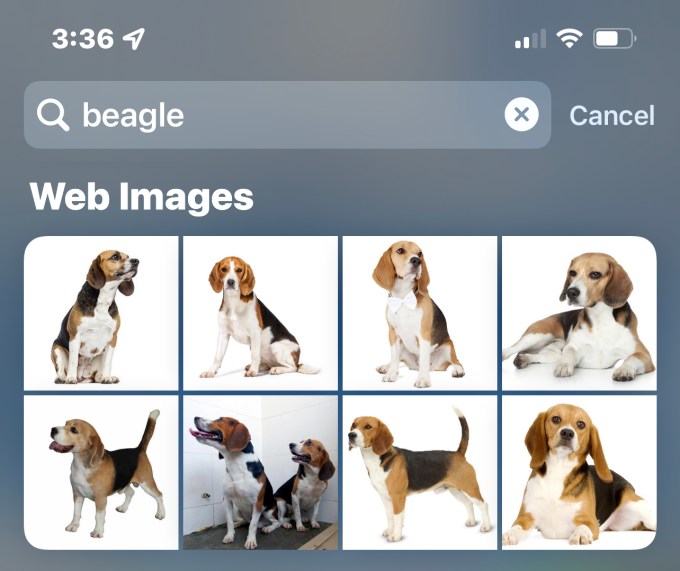

For web image searches, Spotlight also now allows you to search for people, places, animals and more from the web — eating into another search vertical Google today provides.

Image Credits: iOS 15 screenshot

Your personal searches have been upgraded with richer results, too, in iOS 15.

When you search for a contact, you’ll be taken to a card that does more than show their name and how to reach them. You’ll also see their current status (thanks to another iOS 15 feature), as well as their location from Find My, your recent conversations in Messages, your shared photos, calendar appointments, emails, notes and files. It’s almost like a personal CRM system.

Image Credits: Apple

Personal photo searches have also been improved. Spotlight now uses Siri intelligence to allow you to search your photos by the people, scenes and elements in your photos, as well as by location. And it’s able to leverage the new Live Text feature in iOS 15 to find the text in your photos to return relevant results.

This could make it easier to pull up photos where you’ve screenshot a recipe, a store receipt, or even a handwritten note, Apple said.

Image Credits: Apple

A couple of features related to Spotlight’s integration with apps weren’t mentioned during the keynote.

Spotlight will now display action buttons on the Maps results for businesses that will prompt users to engage with that business’s app. In this case, the feature is leveraging App Clips, which are small parts of a developer’s app that let you quickly perform a task even without downloading or installing the app in question. For example, from Spotlight you may be prompted to pull up a restaurant’s menu, buy tickets, make an appointment, order takeout, join a waitlist, see showtimes, pay for parking, check prices and more.

The feature will require the business to support App Clips in order to work.

Image Credits: iOS 15 screenshot

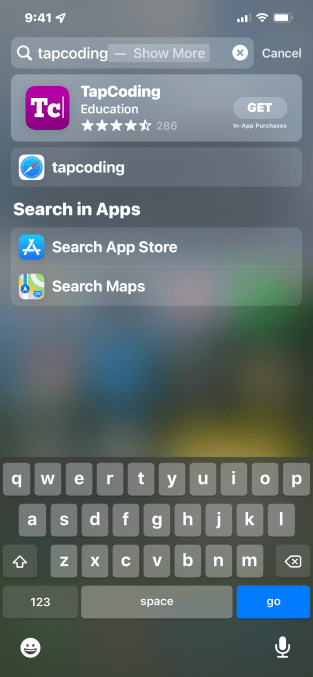

Another under-the-radar change — but a significant one — is the new ability to install apps from the App Store directly from Spotlight.

This could prompt more app installs, as it reduces the steps from a search to a download, and makes querying the App Store more broadly available across the operating system.

Developers can additionally choose to add a few lines of code to their app to make its data discoverable within Spotlight and to customize how it’s presented to users. This means Spotlight can work as a tool for searching content from inside apps, which is another way Apple is steering users away from traditional web searches in favor of apps.

However, unlike Google’s search engine, which relies on crawlers that browse the web to index the data it contains, Spotlight’s in-app search requires developer adoption.
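To illustrate why developer adoption matters, here is a toy Python model of an on-device index that only knows about content apps explicitly donate. It is a conceptual sketch, not Apple’s API: on iOS, apps donate items through the Core Spotlight framework (for example, `CSSearchableIndex`), and the class and method names below are hypothetical.

```python
from collections import defaultdict


class ToySpotlightIndex:
    """Conceptual model of search over content that apps donate (not Apple's API)."""

    def __init__(self):
        self._items = {}  # (app, item_id) -> title
        self._terms = defaultdict(set)  # lowercase word -> {(app, item_id)}

    def donate(self, app, item_id, title, keywords=()):
        """An app opts an item into system search with a title and keywords."""
        key = (app, item_id)
        self._items[key] = title
        for word in title.lower().split() + [k.lower() for k in keywords]:
            self._terms[word].add(key)

    def search(self, query):
        """Return sorted (app, title) pairs matching every word of the query."""
        words = query.lower().split()
        if not words:
            return []
        hits = set.intersection(*(self._terms.get(w, set()) for w in words))
        return sorted((app, self._items[(app, i)]) for app, i in hits)
```

Nothing an app fails to donate can ever appear in `search`, which is exactly the gap a web crawler does not have.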

Still, it’s clear Apple sees Spotlight as a potential rival to web search engines, including Google’s.

“Spotlight is the universal place to start all your searches,” said Apple SVP of Software Engineering Craig Federighi during the keynote event.

Spotlight, of course, can’t handle “all” your searches just yet, but it appears to be steadily working towards that goal.


Apple in 2018 closed its $400 million acquisition of music recognition app Shazam. Now, it’s bringing Shazam’s audio recognition capabilities to app developers in the form of the new ShazamKit. The new framework will allow app developers — including those on both Apple platforms and Android — to build apps that can identify music from Shazam’s huge database of songs, or even from their own custom catalog of pre-recorded audio.

Many consumers are already familiar with the mobile app Shazam, which lets you push a button to identify what song you’re hearing, and then take other actions — like viewing the lyrics, adding the song to a playlist, exploring music trends, and more. Having first launched in 2008, Shazam was already one of the oldest apps on the App Store when Apple snatched it up.

Now the company is putting Shazam to use beyond serving as a music identification utility. With the new ShazamKit, developers will be able to leverage Shazam’s audio recognition capabilities to create their own app experiences.

There are three parts to the new framework: Shazam catalog recognition, which lets developers add song recognition to their apps; custom catalog recognition, which performs on-device matching against arbitrary audio; and library management.

Shazam catalog recognition is what you probably think of when you think of the Shazam experience today. The technology can recognize the song that’s playing in the environment and then fetch the song’s metadata, like the title and artist. The ShazamKit API will also be able to return other metadata like genre or album art, for example. And it can identify where in the audio the match occurred.

To be clear, Shazam doesn’t match against the audio itself. Instead, it creates a lossy representation of the audio, called a signature, and matches against that. This greatly reduces the amount of data that needs to be sent over the network. Signatures also cannot be used to reconstruct the original audio, which protects user privacy.
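As a rough illustration of why a signature is smaller than the audio and cannot be turned back into it, here is a toy fingerprint in Python. It keeps only the loudest of eight coarse frequency bands in each 64-sample frame, hashes consecutive pairs of those band indices, and finds a match by voting on a consistent time offset, which also tells you where in the reference audio the clip occurred. This is a simplified landmark-style scheme chosen for illustration, not Shazam’s actual signature format; every name and constant in it (`FRAME`, `BANDS`, `signature`, `best_match`, `tone_track`) is hypothetical.

```python
import cmath
import math
from collections import Counter, defaultdict

FRAME = 64  # samples per analysis frame (toy value)
BANDS = 8   # coarse frequency bands; only the loudest one survives per frame


def loudest_band(frame):
    """Index of the band with the most spectral energy, via a naive DFT."""
    n = len(frame)
    mags = [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
            for k in range(n // 2)]
    width = len(mags) // BANDS
    energy = [sum(mags[b * width:(b + 1) * width]) for b in range(BANDS)]
    return max(range(BANDS), key=lambda b: energy[b])


def signature(samples):
    """Lossy signature: (band, next band) hashes, each tagged with its frame index."""
    peaks = [loudest_band(samples[i:i + FRAME])
             for i in range(0, len(samples) - FRAME + 1, FRAME)]
    return [((a, b), i) for i, (a, b) in enumerate(zip(peaks, peaks[1:]))]


def best_match(query_sig, catalog):
    """Return (track name, frame offset, votes) for the most consistent alignment."""
    index = defaultdict(list)  # hash -> [(track name, frame index), ...]
    for name, sig in catalog.items():
        for h, pos in sig:
            index[h].append((name, pos))
    votes = Counter()
    for h, qpos in query_sig:
        for name, pos in index.get(h, []):
            votes[(name, pos - qpos)] += 1  # same offset means same alignment
    if not votes:
        return None
    (name, offset), score = votes.most_common(1)[0]
    return name, offset, score


def tone_track(bands):
    """Synthetic test audio: one pure tone per frame, centred in the given band."""
    width = (FRAME // 2) // BANDS
    out = []
    for b in bands:
        k = b * width + width // 2  # DFT bin that falls inside band b
        out += [math.sin(2 * math.pi * k * t / FRAME) for t in range(FRAME)]
    return out


song_a = [3, 1, 4, 1, 5, 2, 6, 5, 3, 3, 0, 7, 2, 6, 4]
song_b = [2, 7, 1, 0, 6, 3, 3, 7, 4, 0, 1, 5, 6, 2, 0]
catalog = {"song_a": signature(tone_track(song_a)), "song_b": signature(tone_track(song_b))}
clip = tone_track(song_b[5:11])  # six frames lifted from the middle of song_b
print(best_match(signature(clip), catalog))  # ('song_b', 5, 5)
```

The 384-sample clip shrinks to five small hashes, and one of them also collides with `song_a`, but only `song_b` at an offset of five frames collects consistent votes. This toy assumes the clip starts exactly on a frame boundary; real recognizers are built to tolerate misalignment, noise and tempo drift.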

The Shazam catalog comprises millions of songs, is hosted in the cloud and is maintained by Apple. It’s regularly updated with new tracks as they become available.

When a customer uses a developer’s third-party app for music recognition via ShazamKit, they may want to save the song to their Shazam library. The library can be found in the Shazam app, if the user has it installed, or accessed by long-pressing the music recognition module in Control Center. It is also synced across devices.

Apple suggests that apps make their users aware that recognized songs will be saved to this library, as there’s no special permission required to write to the library.

Image Credits: Apple

ShazamKit’s custom catalog recognition feature, meanwhile, could be used to create synced activities or other second-screen experiences in apps by recognizing the developer’s own audio rather than songs from the Shazam music catalog.

This could enable educational apps in which students follow along with a video lesson and a portion of the lesson’s audio prompts an activity to begin in the student’s companion app. It could also be used to create mobile shopping experiences that pop up as you watch a favorite TV show.
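Once recognition reports where in the developer’s reference audio a match occurred, a companion app still has to decide what to show. Here is a minimal Python sketch of that last step, assuming the app receives the match offset in seconds and keeps its own cue sheet for the lesson. The cue list and `activity_for_offset` are hypothetical illustrations, not part of ShazamKit.

```python
from bisect import bisect_right

# Hypothetical cue sheet for one video lesson: (start time in seconds, activity id)
LESSON_CUES = [
    (0.0, "intro"),
    (95.0, "quiz-1"),
    (240.0, "diagram-exercise"),
    (410.0, "quiz-2"),
]


def activity_for_offset(offset_seconds, cues=LESSON_CUES):
    """Return the activity whose cue most recently started at this point in the audio."""
    starts = [start for start, _ in cues]
    i = bisect_right(starts, offset_seconds) - 1
    return cues[i][1] if i >= 0 else None


print(activity_for_offset(100.0))  # quiz-1
```

Keeping the cues sorted and using binary search makes each lookup O(log n), and the same table works whether the offset comes from the first match or from a later re-sync.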

ShazamKit is currently in beta on iOS 15.0+, macOS 12.0+, Mac Catalyst 15.0+, tvOS 15.0+, and watchOS 8.0+. On Android, ShazamKit comes in the form of an Android Archive (AAR) file and also supports both music and custom audio.

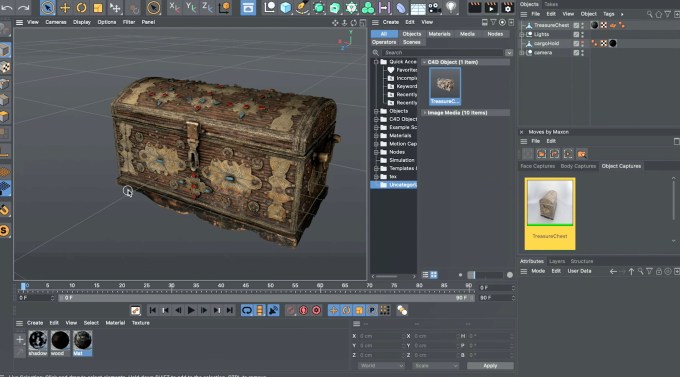

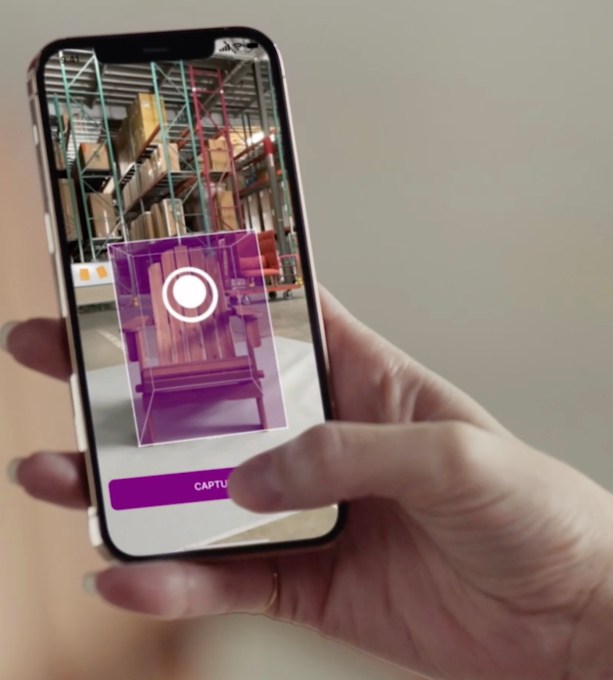

At its Worldwide Developers Conference, Apple announced a significant update to RealityKit, its suite of technologies that allow developers to get started building AR (augmented reality) experiences. With the launch of RealityKit 2, Apple says developers will have more visual, audio and animation control when working on their AR experiences. But the most notable part of the update is how Apple’s new Object Capture API will allow developers to create 3D models in minutes using only an iPhone.

Apple noted during its developer address that one of the most difficult parts of making great AR apps was creating 3D models, a process that could take hours and cost thousands of dollars.

With Apple’s new tools, developers will be able to take a series of pictures using just an iPhone (or an iPad, DSLR or even a drone, if they prefer), capturing 2D images of an object from all angles, including the bottom.

Then, using the Object Capture API on macOS Monterey, it only takes a few lines of code to generate the 3D model, Apple explained.

Image Credits: Apple

To begin, developers start a new photogrammetry session in RealityKit that points to the folder containing the captured images. Then they call the process function to generate the 3D model at the desired level of detail. Object Capture lets developers generate USDZ files optimized for AR Quick Look, the system that lets developers add virtual 3D objects to apps or websites on iPhone and iPad. The 3D models can also be added to AR scenes in Reality Composer in Xcode.

Apple said developers like Wayfair, Etsy and others are using Object Capture to create 3D models of real-world objects — an indication that online shopping is about to get a big AR upgrade.

Wayfair, for example, is using Object Capture to develop tools that let its manufacturers create virtual representations of their merchandise. This will allow Wayfair customers to preview more products in AR than they can today.

Image Credits: Apple (screenshot of Wayfair tool)

In addition, Apple noted developers including Maxon and Unity are using Object Capture for creating 3D content within 3D content creation apps, such as Cinema 4D and Unity MARS.

Other updates in RealityKit 2 include custom shaders that give developers more control over the rendering pipeline to fine-tune the look and feel of AR objects; dynamic loading for assets; the ability to build your own Entity Component System to organize the assets in your AR scene; and the ability to create player-controlled characters so users can jump, scale and explore AR worlds in RealityKit-based games.

One developer, Mikko Haapoja of Shopify, has been trying out the new technology and shared some real-world tests on Twitter in which he shot objects using an iPhone 12 Max.

Developers who want to test it for themselves can leverage Apple’s sample app and install Monterey on their Mac to try it out. They can use the Qlone camera app or any other image capturing application they want to download from the App Store to take the photos they need for Object Capture, Apple says. In the fall, the Qlone Mac companion app will leverage the Object Capture API as well.

Apple says there are over 14,000 ARKit apps on the App Store today, built by more than 9,000 developers. With more than 1 billion AR-enabled iPhones and iPads in use globally, Apple says it offers the world’s largest AR platform.

Today’s WWDC keynote from Apple covered a huge range of updates. From a new macOS to a refreshed watchOS to a new iOS, better privacy controls, FaceTime updates, and even iCloud+, there was something for everyone in the laundry list of new code.

Apple’s keynote was essentially what happens when the big tech companies get huge; they have so many projects that they can’t just detail a few items. They have to run down their entire parade of platforms, dropping packets of news concerning each.

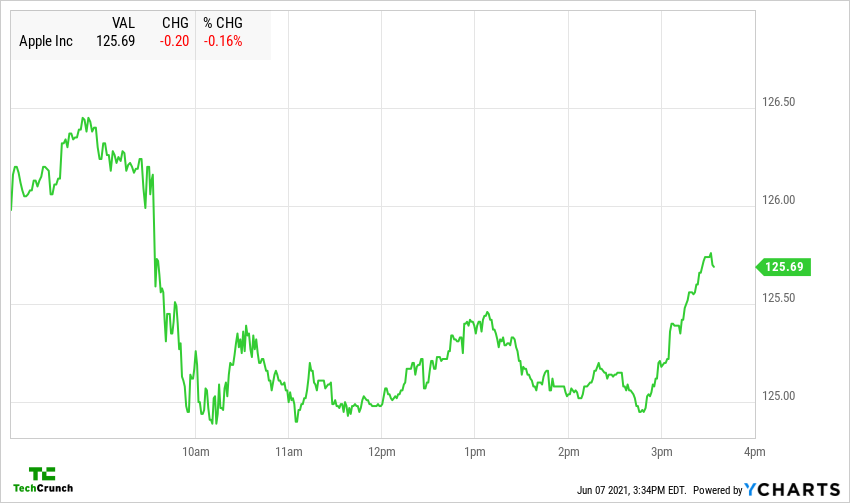

But despite the obvious indication that Apple has been hard at work on the critical software side of its business, especially on the services side, Wall Street gave a firm, emphatic shrug.

This is standard but always slightly confusing.

Investors care about future cash flows, at least in theory. Those future cash flows come from anticipated revenues, which stem from product updates that drive growth in sales of services, software, and hardware. Which, apart from the hardware portion of the equation, is precisely what Apple detailed today.

And lo, Wall Street looked upon the drivers of its future earnings estimates, and did sayeth “lol, who really cares.”

Shares of Apple were down a fraction for most of the day, picking up as time passed, not thanks to the company’s news dump but because the Nasdaq broadly rose as trading raced to a close.

Here’s the Apple chart, via YCharts:

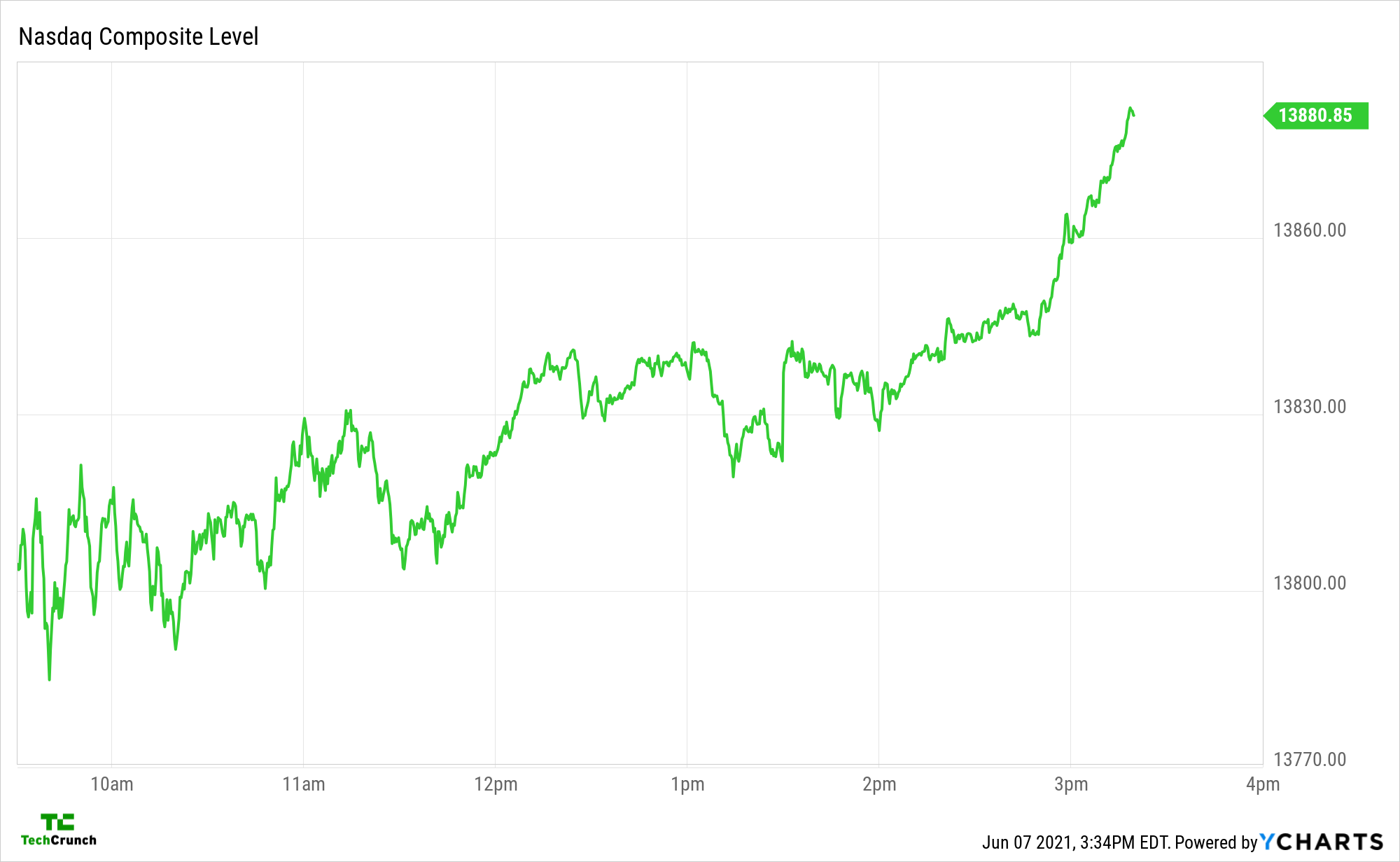

And here’s the Nasdaq:

Presuming that you are not a ChartMaster, those might not mean much to you. Don’t worry. The charts say very little overall, so you aren’t missing much. Apple was down a bit, and the Nasdaq up a bit. Then the Nasdaq went up more, and Apple’s stock generally followed. Which is good, to be clear, but somewhat immaterial.

So after yet another major Apple event that will help determine the health and popularity of every Apple platform — key drivers of lucrative hardware sales! — the markets are betting that all their prior work estimating the True and Correct value of Apple was dead-on and that there is no need for any sort of up-or-down change.

That, or Apple is so big now that investors are simply betting it will grow in keeping with GDP. Which would be a funny diss. Regardless, there is more coverage of the Apple event in case you are behind.