Facebook fights fake news with author info, rolls out publisher context

Red flags and “disputed” tags just entrenched people’s views about suspicious news articles, so Facebook is hoping to give readers a wide array of info so they can make their own decisions about what’s misinformation. Facebook will try showing links to a journalist’s Wikipedia entry, their other articles, and a follow button to help users make up their minds about whether the author is a legitimate source of news. The test will be shown to a subset of users in the U.S. when they click on the author’s name within an Instant Article, provided the author’s publisher has implemented Facebook’s author tags.

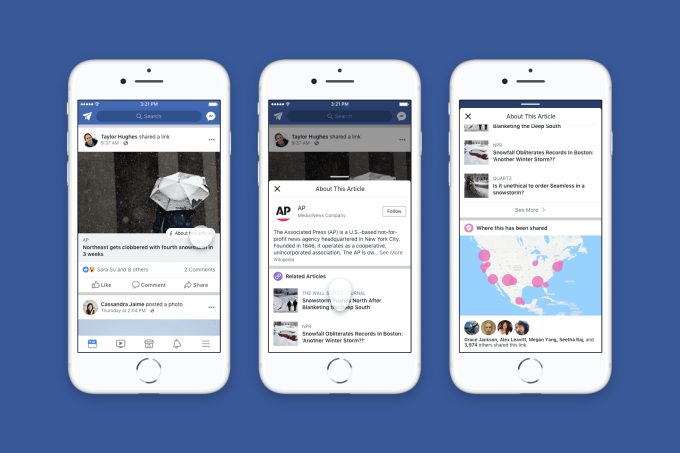

Meanwhile, Facebook is rolling out to everyone in the U.S. its test from October that gives readers more context about publications. Behind an “About This Article” button, readers will find links to the publisher’s Wikipedia page, related articles about the same topic, how many times the article has been shared and where, and a button for following the publisher. Facebook will also start to show whether friends have shared the article, and a snapshot of the publisher’s other recent articles.

Since much of this context can be algorithmically generated rather than relying on human fact checkers, the system could scale much more quickly to different languages and locations around the world.

These moves are designed to feel politically neutral to prevent Facebook from being accused of bias. After former contractors reported that they suppressed conservative Trending topics on Facebook in 2016, Facebook took a lot of heat for supposed liberal bias. That caused it to hesitate when fighting fake news before the 2016 Presidential election…and then spend the next two years dealing with the backlash for allowing misinformation to run rampant.

Newsroom: Article Context Launch Video

Posted by Facebook on Monday, April 2, 2018

Facebook’s partnerships with outside fact checkers, which saw red “Disputed” flags added to debunked articles, actually backfired. Those sympathetic to the false narrative saw the red flag as a badge of honor, clicking and sharing anyway rather than allowing someone else to tell them they’re wrong.

That’s why today’s rollout and new test never confront users directly about whether an article, publisher, or author is propagating fake news. Instead Facebook hopes to build a wall of evidence as to whether a source is reputable or not.

If other publications have similar posts, the publisher or author has a well-established Wikipedia article to back up their integrity, and the publisher’s other articles look legit, users could draw their own conclusion that the source is worth believing. But if there are no Wikipedia links, other publications are contradicting the story, no friends have shared it, and the publisher’s or author’s other articles look questionable too, Facebook might be able to incept the idea that the reader should be skeptical.
